Twitter Algorithm: A Dangerous Weapon in the Hands of the Manipulators

In today's digital age, social media has become an integral part of our daily lives. Among these platforms, Twitter stands out as one that enables users to express their opinions, engage with others, and stay informed about the latest news and events worldwide.

However, with great power comes great responsibility, and Twitter's algorithm is no exception. In recent years, there have been growing concerns that the algorithm can be misused to manipulate and damage the reputation of users' accounts, leaving them without any recourse.

The discovery of the CVE-2023-29218 vulnerability in Twitter's code is a reminder that online platforms are not invulnerable to cyberattacks.

The response from Twitter CEO Elon Musk, offering a bounty for the conviction of those behind the botnets exploiting the vulnerability, highlights the seriousness of the situation.

It is a reminder that cybersecurity is a shared responsibility and that we all have a role to play in protecting ourselves and our communities.

This article sheds light on the potential dangers of the Twitter algorithm as a manipulative tool and highlights the growing concerns about damage to users' account reputations.

How the Twitter Algorithm Can Be Exploited

Twitter's algorithm chooses tweets based on a collection of core models and features that extract latent information from tweet, user, and engagement data.

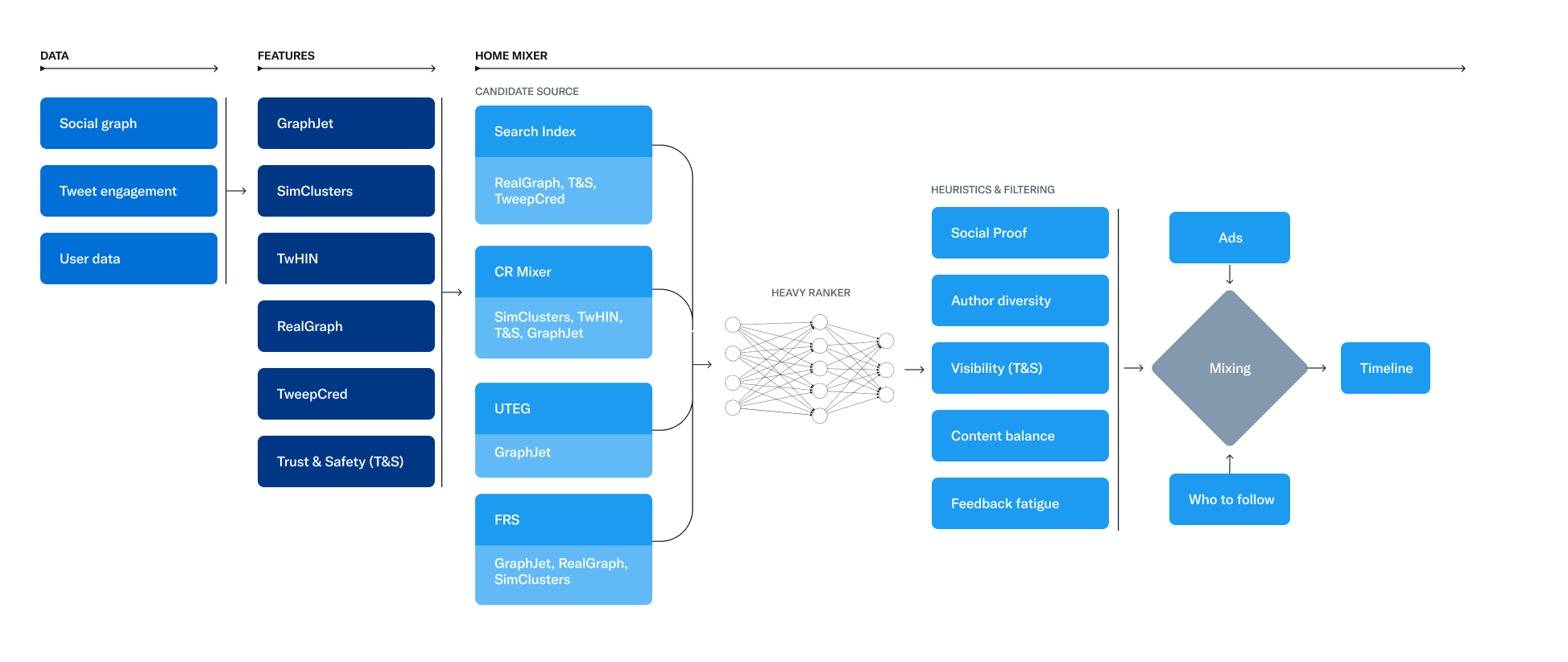

Twitter's timeline recommendation pipeline has three major stages: candidate sourcing, ranking with a machine learning model, and applying heuristics and filters.

The service in charge of building and serving the timeline is Home Mixer, the technical framework that connects the various candidate sources, scoring functions, heuristics, and filters.

For each request, the Twitter algorithm draws on in-network and out-of-network sources to select the top 1500 tweets from a pool of hundreds of millions.

Embedding space methods create numerical representations of users’ interests and Tweets’ content to answer the more general question of content similarity.
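To make the idea of content similarity concrete, here is a minimal sketch that compares a user-interest vector with tweet vectors using cosine similarity. The vectors, their three dimensions, and the tweet names are invented for illustration, and cosine similarity is an assumed measure; the article does not state which similarity function Twitter uses.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # Treat an all-zero vector as similar to nothing.
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, kept tiny so the numbers are easy to follow.
user_interest = [0.9, 0.1, 0.0]
tweets = {
    "tweet_about_sports": [0.8, 0.2, 0.1],
    "tweet_about_cooking": [0.0, 0.1, 0.9],
}

# Order tweets from most to least similar to the user's interests.
ranked = sorted(
    tweets,
    key=lambda name: cosine_similarity(user_interest, tweets[name]),
    reverse=True,
)
print(ranked)
```

The tweet whose vector points in nearly the same direction as the user's vector comes first, which is the intuition behind using embeddings to find out-of-network content.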

The timeline’s objective is to serve relevant Tweets by ranking them by a score that directly predicts each candidate Tweet’s relevance.
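The three stages described above can be sketched as a small pipeline. This is an illustrative toy, not Home Mixer's code: the `Candidate` class, the function names, the `toy_model` scorer, and the blocked-author filter are all assumptions made for the example. Only the three-stage shape and the cap of 1500 candidates come from the description above.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    tweet_id: int
    author: str
    in_network: bool
    score: float = 0.0


def source_candidates(in_network, out_of_network, limit=1500):
    """Stage 1: merge both sources and cap the candidate pool."""
    # Simplification: a plain concatenation followed by a cut-off.
    return (in_network + out_of_network)[:limit]


def rank(candidates, relevance_model):
    """Stage 2: score every candidate, then sort from highest to lowest."""
    for candidate in candidates:
        candidate.score = relevance_model(candidate)
    return sorted(candidates, key=lambda c: c.score, reverse=True)


def apply_filters(candidates, blocked_authors):
    """Stage 3: heuristics and filters (here, only dropping blocked authors)."""
    return [c for c in candidates if c.author not in blocked_authors]


def build_timeline(in_network, out_of_network, relevance_model, blocked_authors):
    pool = source_candidates(in_network, out_of_network)
    return apply_filters(rank(pool, relevance_model), blocked_authors)


def toy_model(candidate):
    """Placeholder for the ML ranker: simply favors in-network tweets."""
    return 1.0 if candidate.in_network else 0.5


timeline = build_timeline(
    [Candidate(1, "alice", True), Candidate(2, "bob", True)],
    [Candidate(3, "carol", False)],
    toy_model,
    blocked_authors={"bob"},
)
print([c.tweet_id for c in timeline])
```

In this toy run the viewer has blocked "bob", so his tweet is removed in the last stage even though it scored as well as "alice"'s.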

The problem with the current implementation of the Twitter algorithm is that all penalties are applied at the account level, regardless of the content's nature. This means that even if the reported content is entirely legitimate, the account owner still suffers the consequences.

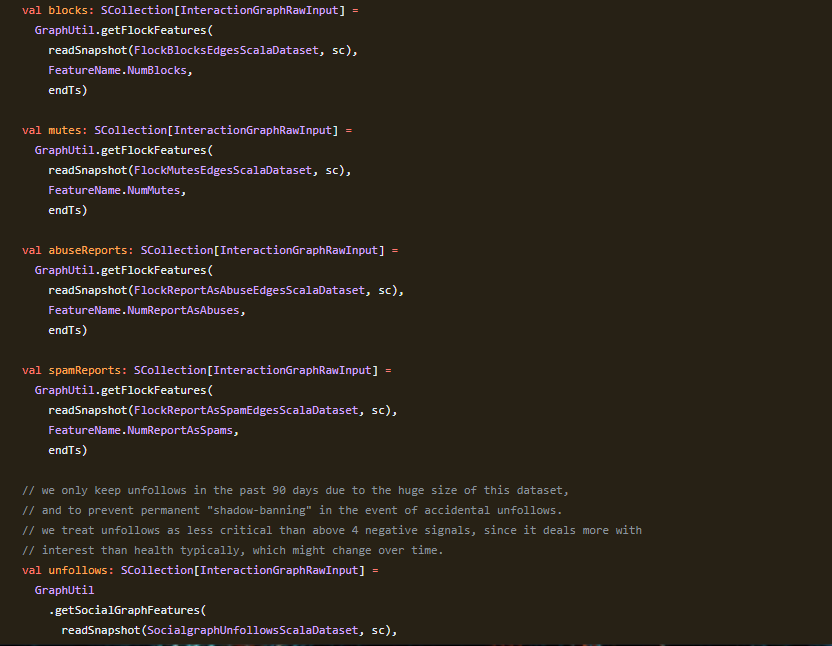

The process of exploiting the Twitter algorithm is quite straightforward. First, a group of users with similar views needs to be organized. They then find a target and execute the following tasks in order: follow in preparation, unfollow a few days later, report a few "borderline" posts, mute, and block.

This coordinated effort results in a global penalty being applied to the target's account, significantly hurting its reputation and visibility.

The Vulnerabilities in the Twitter Algorithm

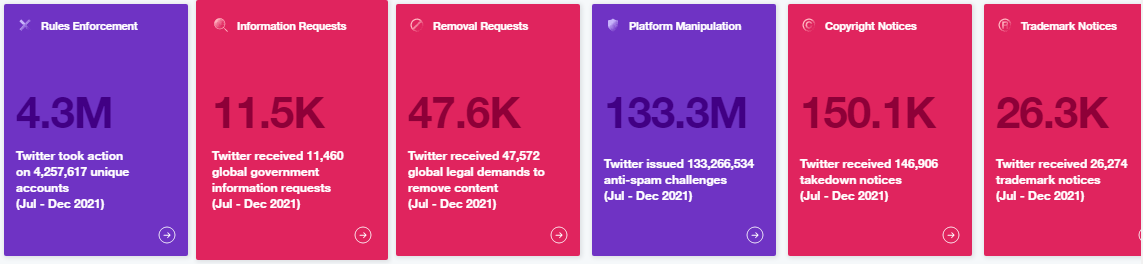

Twitter, one of the world's most popular social media platforms, has recently been hit with a new security vulnerability, CVE-2023-29218, which allows attackers to manipulate its recommendation algorithm using mass blocking actions from multiple bot-created accounts.

This flaw has the potential to suppress specific users from appearing in people's feeds by artificially driving down their reputation scores, causing a denial of service.

Moreover, the penalties accumulate over time and outlast the tweet itself, making them even more dangerous. No matter how much the account owner tries to boost their visibility, once enough people apply enough negative signals, the multiplier becomes so low that the damage is practically impossible to reverse.

To make matters worse, apps and communities such as BlockParty, Reddit's GamerGhazi, and BlockTogether enable users to build, organize, and weaponize this behavior. BlockTogether, for instance, had 303,000 registered users, 198,000 of whom subscribed to at least one list, and recorded 4.5 billion actions.
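A rough sketch shows why accumulated penalties are so hard to recover from. It assumes, purely for illustration, that every negative signal multiplies an account-level visibility multiplier by a fixed factor below 1. The factor values and function names are invented; this is not Twitter's actual formula.

```python
# Hypothetical per-signal penalty factors; the real weights are not
# given in this article.
PENALTY_FACTORS = {
    "unfollow": 0.99,
    "mute": 0.98,
    "block": 0.97,
    "report": 0.95,
}


def visibility_multiplier(signals):
    """Multiply together one penalty factor per negative signal received."""
    multiplier = 1.0
    for signal in signals:
        multiplier *= PENALTY_FACTORS[signal]
    return multiplier


def coordinated_attack(num_accounts):
    """Each attacking account unfollows, reports, mutes, and blocks the target."""
    return ["unfollow", "report", "mute", "block"] * num_accounts


for n in (1, 10, 100):
    print(n, visibility_multiplier(coordinated_attack(n)))
```

Because the factors compound, the multiplier shrinks geometrically with the number of attacking accounts. Under these assumed weights, a group of 100 accounts is enough to push it close to zero.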

"The Twitter Recommendation Algorithm through ec83d01 allows attackers to cause a denial of service (reduction of reputation score) by arranging for multiple Twitter accounts to coordinate negative signals regarding a target account, such as unfollowing, muting, blocking, and reporting, as exploited in the wild in March and April 2023."

Twitter's recommendation algorithm uses machine learning to suggest content to users. The algorithm takes into account various factors such as a user's activity, who they follow, and what they interact with. It then suggests content to the user based on these factors.

The vulnerability lies in the algorithm's implementation. Twitter's algorithm treats negative signals such as unfollows, mutes, blocks, and reports as factors in determining a user's reputation score. This means that if multiple accounts coordinate negative actions against a target account, they can artificially drive down the target's reputation score, so that the target's content is suppressed by Twitter's recommendation engine.

This vulnerability is dangerous for users because it can be exploited to manipulate the algorithm and artificially suppress their content in people's feeds and in the recommendation engine. The result can be significant harm to the user's reputation and online presence, a loss of followers and engagement, and potentially damage to their career.

The vulnerability was caused by a flaw in the recommendation algorithm's code, which allowed negative actions to be coordinated and amplified by botnet armies. The affected code is identified as the recommendation algorithm through commit ec83d01, and the flaw has since been patched by Twitter.

The problem: Blocking large numbers of people on social media reduces diversity in discussion.

To address this problem, one possible solution is to implement a reputation-based system that rewards users with high credibility and penalizes those with low credibility.

The algorithm could take into account various factors such as the age of the account, the frequency of posts, the number of followers, the number of likes and retweets, and the engagement rate. Users with high credibility scores would receive a boost in their posts' visibility, while users with low credibility scores would experience a downgrade.

To further discourage the creation of fake accounts, the algorithm could also impose a penalty on accounts that are suspected of being fake or engaging in malicious activities.

For instance, accounts that are reported by multiple users or flagged by automated systems could receive a temporary ban or a permanent suspension.

Here's an example of how the algorithm could work:

- User A has an account that is one year old, has 5,000 followers, and averages 100 likes and 50 retweets per post. User A's engagement rate is calculated as (100+50)/(5,000)=0.03, and the credibility score is assigned as 80 out of 100.

- User B has an account that is one month old, has 100 followers, and averages 1 like and 0 retweets per post. User B's engagement rate is calculated as (1+0)/(100)=0.01, and the credibility score is assigned as 20 out of 100.

- User C has an account that is suspected of being fake, has 10,000 followers, and averages 1,000 likes and 500 retweets per post. User C's engagement rate is calculated as (1,000+500)/(10,000)=0.15, but the credibility score is penalized as 30 out of 100 due to suspicious activity.

In this example, User A would receive a small boost in their posts' visibility, User B would receive a significant downgrade, and User C would receive a severe penalty. This algorithm could incentivize users to create authentic and credible accounts and discourage malicious behavior on Twitter.
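The engagement rates in the example above can be reproduced with a few lines of code. The sketch below only computes the rate as (likes + retweets) / followers. The credibility scores are copied from the example as given, since no formula for deriving them is specified. The function name and the data layout are illustrative assumptions.

```python
def engagement_rate(avg_likes, avg_retweets, followers):
    """Average likes plus retweets per post, divided by follower count."""
    if followers == 0:
        return 0.0  # Avoid dividing by zero for accounts with no followers.
    return (avg_likes + avg_retweets) / followers


# Figures taken from the three example users; "credibility" is the score
# assigned in the example, not something this code computes.
users = {
    "A": {"followers": 5_000, "likes": 100, "retweets": 50, "credibility": 80},
    "B": {"followers": 100, "likes": 1, "retweets": 0, "credibility": 20},
    "C": {"followers": 10_000, "likes": 1_000, "retweets": 500, "credibility": 30},
}

rates = {
    name: engagement_rate(u["likes"], u["retweets"], u["followers"])
    for name, u in users.items()
}

for name, rate in rates.items():
    print(f"User {name}: engagement rate {rate:.2f}, "
          f"credibility {users[name]['credibility']}/100")
```

User C has the highest engagement rate yet a low credibility score, which illustrates that engagement alone cannot determine credibility in such a system. The suspicion of fake activity has to be factored in separately.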

Twitter Takes Control of Its Platform with New House-Keeping Initiatives

As a leading social media platform, Twitter has a responsibility to protect its users from abuse, harassment, and manipulation. The company must take proactive steps to address the vulnerabilities in its algorithm and ensure that it cannot be exploited to damage users' account reputations.

This includes implementing measures to prevent coordinated attacks, improving the transparency of the algorithm, and providing users with more control over their accounts' visibility.

Twitter is not the only tech giant that has faced criticism for its algorithmic practices. Algorithmic bias has been a hot topic in recent years, with many calling for greater transparency and accountability in the algorithms used by tech companies.

Google has also faced criticism for its algorithmic practices. In 2020, it was reported that Google's search results favored the company's own products and services over those of its competitors, and the U.S. Department of Justice filed an antitrust lawsuit against Google over its search business that same year.

Similarly, Facebook has faced criticism for its algorithmic practices. The company has been accused of amplifying extremist content and allowing false information to spread. In response, Facebook has made changes to its algorithm to prioritize content from reputable sources.

Conclusion

In conclusion, the Twitter algorithm can be a powerful tool for users to engage with others, express their opinions, and get informed about the latest news and events. However, it can also be a dangerous weapon in the hands of manipulators who seek to hurt, silence, or undermine other users' accounts.

Source: https://github.com/twitter/the-algorithm

If you're interested in learning more about the technical aspects of these solutions or need assistance with cybersecurity practices, be sure to reach out to the experts at Cyberstanc. Our team of professionals can provide you with the latest information and best practices for online safety and security. You can contact us at [email protected] for more information.

Together, we can make social media a safer and more productive space for everyone. Let's continue to work towards a brighter and more secure digital future.